Keeping a VPAT up to date

Your team has published your first Accessibility Conformance Report or VPAT. Hooray! But now what? If your team works in an agile style, that system is going to keep getting updated and changed. How do you make sure the report stays accurate and up to date? While it isn’t a prescriptive solution for every team, this is an exploration of how our team working on the Space Force portal is iterating on our VPAT with each release.

checklist for VPAT update with new release in Truss USSF team task tracking

Select a sustainable cadence

On my team working on the Space Force Portal, we ship changes to our end users in releases, which means new features come out in batches. When deciding how often to update our VPAT, we used that release structure as the scaffolding for our cadence. Our releases go out every 4–8 weeks or so, which keeps the amount to be tested each time relatively small, at under a day of work.

Chart showing what kind of testing takes place at which stage of the development process for the Space Force portal team

We do different levels of accessibility testing at different stages of the development process. We do a lower-fidelity level of manual testing using ANDI and keyboard checks for each PR, and a higher-fidelity, by-the-book Trusted Tester run-through for each release.

Your toolkit & process

A good next step is to decide what tools and processes your team wants to use to create your report. Different things might make sense depending on the size of your system and the scope of what you test. You may be doing the testing work yourself, or hiring an outside consultant to do the testing for you. Hiring out can be beneficial, as many of these organizations employ people with disabilities to conduct the audits. This leads to less confusion and speculation about whether something “passes.”

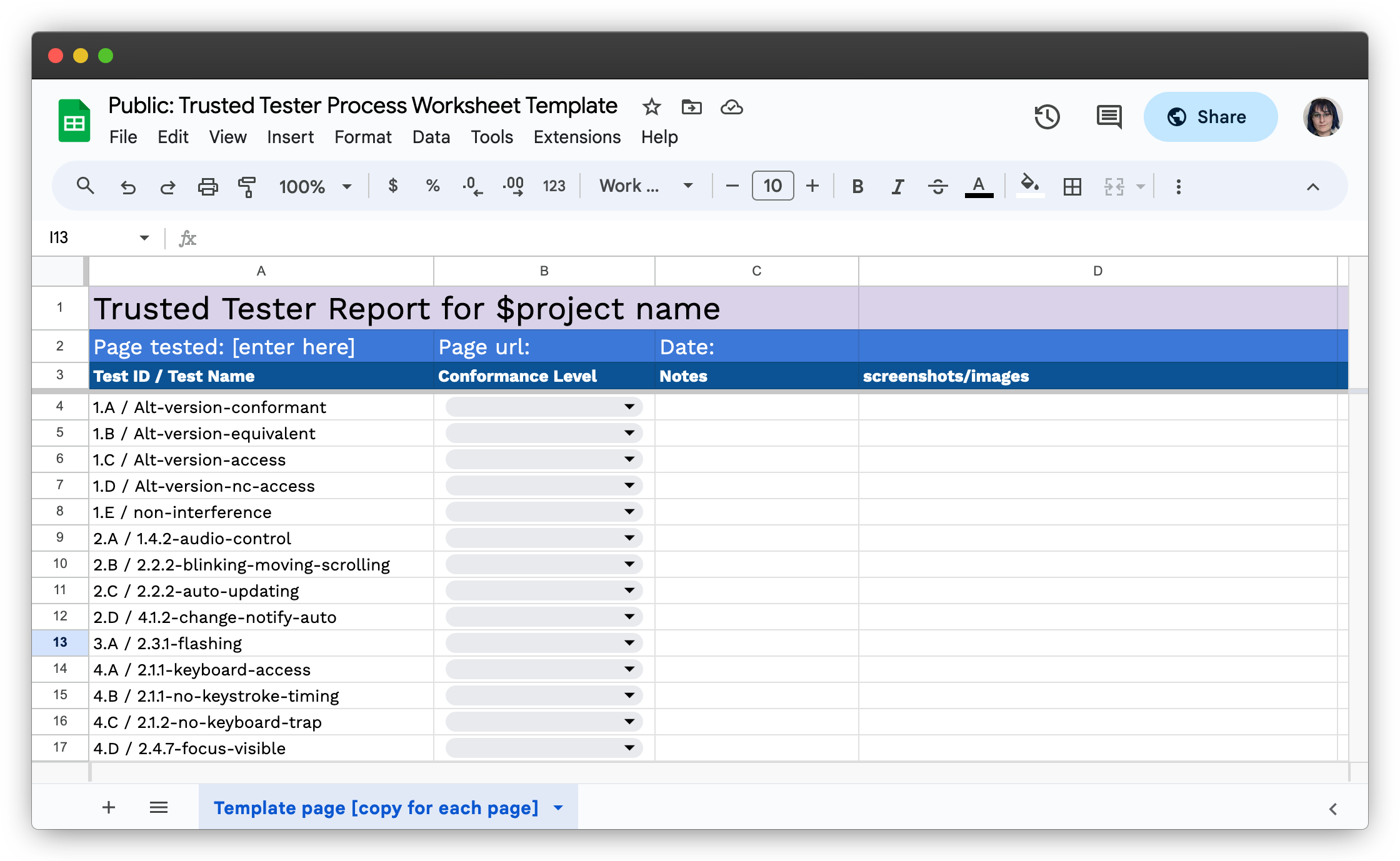

On my team, we currently do the testing ourselves, so we have to plan it out a bit more. Our system is about sixteen main pages, so tracking all the Trusted Tester IDs for each page in a spreadsheet is reasonable and helps break the task up into more manageable parts. If your scope of pages is larger than that, you may need something more robust. If your system is smaller, something less robust may serve you better. Generally, we scope release testing to pages and components that were changed or added in the release. We look through the release notes to determine that scope.

That spreadsheet helps us populate our data from all the pages into ACRT to generate our report. ACRT has a feature that allows us to load the JSON file from our most recent report (presumably from our most recent release) that we can use as a starting point, so we are editing the data as opposed to starting fresh each time.

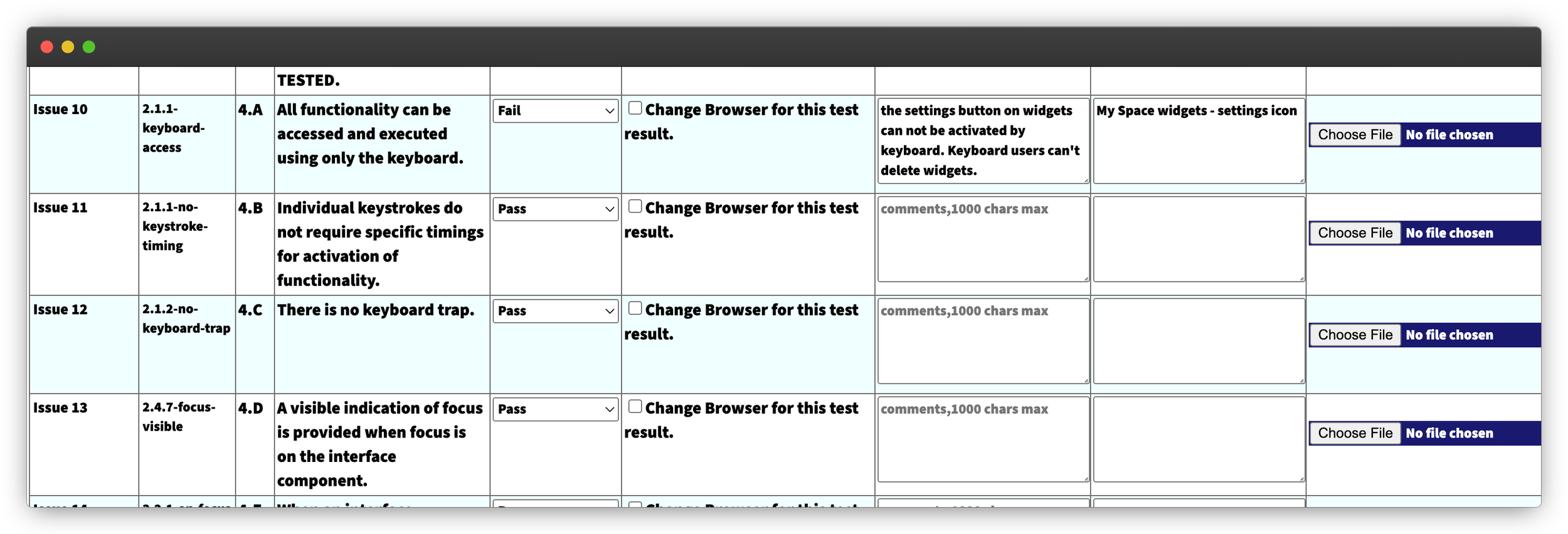

From there, once the spreadsheet is updated with data from the more granular page-by-page tests, we go through each page to determine whether we need to report a failure for a particular test ID. If the test ID passes on every page, we can mark it as passing. If there is one failure, we add it to the ACRT row for that test ID. If there are multiple failures, we add another line for the same test ID in ACRT for each one. That way, when we go to update the report, we can delete an individual row once that single issue is resolved.
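The roll-up described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the tuple layout, the placeholder test IDs, the sample notes, and the `summarize_by_test_id` helper are all hypothetical, and the output rows are not ACRT's actual file format. They simply mirror the rule of one passing row per test ID, or one row per failure.

```python
# Hypothetical page-level results, as they might be copied out of a tracking
# spreadsheet. Each tuple is (page, test_id, passed, note). The test IDs and
# notes are placeholders for illustration only.
page_results = [
    ("Home", "1.A", True, ""),
    ("Home", "4.B", False, "Focus indicator missing on search button"),
    ("News", "1.A", True, ""),
    ("News", "4.B", False, "Focus indicator missing on filter chips"),
    ("Profile", "1.A", True, ""),
]


def summarize_by_test_id(results):
    """Roll page-level results up into report rows, one test ID at a time.

    A test ID that passes on every page yields a single "Pass" row.
    A test ID with failures yields one "Fail" row per failure, so a single
    row can be deleted later when that one issue is resolved.
    """
    order = []      # test IDs in first-seen order
    failures = {}   # test_id -> list of "page: note" strings
    for page, test_id, passed, note in results:
        if test_id not in failures:
            failures[test_id] = []
            order.append(test_id)
        if not passed:
            failures[test_id].append(f"{page}: {note}")

    rows = []
    for test_id in order:
        if not failures[test_id]:
            rows.append({"test_id": test_id, "result": "Pass", "issue": ""})
        for issue in failures[test_id]:
            rows.append({"test_id": test_id, "result": "Fail", "issue": issue})
    return rows


if __name__ == "__main__":
    for row in summarize_by_test_id(page_results):
        print(row)
```

Keeping each failure on its own row is what makes the later update cheap: resolving one issue means removing one row rather than rewriting a combined entry.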

What it looks like to enter accessibility issues into ACRT

This approach is not one size fits all. Depending on the scope of your work, the industry you work in, and the frequency of your VPAT updates, this process might look different. You might use different tools, but the principle of iteration remains the same.

Sharing & socializing the report

Doing accessibility conformance reporting is a requirement in many cases, so it is always best to speak with stakeholders and ask what their needs are in terms of obtaining new reports when they are issued. Different government agencies and organizations have differing requirements for where they like to log and keep reports to reference.

We consulted with the Section 508 Program Manager at our agency’s parent org to determine what our project needed to do to meet their requirements. As each report comes out, we email it to a specific person who makes sure it is filed where it needs to go.

Outside of what is required, we keep our reports in our own cloud storage so they are always available to anyone on the team who would like to reference them. Accessibility does not occur in a silo, and making information available to anyone on the team ensures that it is not lost and that it is used and prioritized in our work.

Space Force team accessibility directory, showing current VPATs and a directory where old ones are archived

We also share the report in our project Slack channel to highlight that a new report has been issued, along with any key wins our team shipped in the most recent release. It is a moment to celebrate our accomplishments and keep the conversation open about how the choices we make as we work can impact the accessibility of the platform.

Growth journeys are not always linear, and from time to time we do find new issues that have arisen in the last release cycle. That is not a bad thing, and it’s why we do the extra release-level platform testing. This can also happen if we run a usability test with a person with a disability during that time period. These folks are generally much more efficient at catching usability issues with assistive technologies that we, as more typical users running manual tests, might not catch. This is also an opportunity to share our new findings and the remediation tickets we can add to the backlog to address in a future release.

Prioritizing remediation work

The final step in this cycle is making sure the findings that are captured get added to the backlog so they are addressed in future work. We take all the issues we find across all the pages and test IDs and move them to a front page of our spreadsheet called “Findings,” which gives our product manager the information needed to add new tickets to our backlog.
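As a rough illustration of that consolidation step, the sketch below gathers every failure into a single findings list and groups identical issues so that each one lists the pages it affects. The data layout, the placeholder test IDs, and the `build_findings` helper are assumptions made for this example, not a description of our actual spreadsheet.

```python
# Hypothetical page-level results: (page, test_id, passed, note).
# Test IDs and notes are placeholders for illustration only.
page_results = [
    ("Home", "4.B", False, "Focus indicator missing on buttons"),
    ("News", "4.B", False, "Focus indicator missing on buttons"),
    ("News", "7.A", False, "Image missing alt text"),
    ("Profile", "4.B", True, ""),
]


def build_findings(results):
    """Collect failures into a findings list, grouped by test ID and issue.

    Each finding records the test ID, the issue text, and every page where
    that same issue was seen, which is roughly what a ticket would need.
    """
    grouped = {}  # (test_id, note) -> list of affected pages
    for page, test_id, passed, note in results:
        if passed:
            continue
        grouped.setdefault((test_id, note), []).append(page)

    # Sort by test ID, then issue text, so the list is stable between runs.
    return [
        {"test_id": test_id, "issue": note, "pages": pages}
        for (test_id, note), pages in sorted(grouped.items())
    ]


if __name__ == "__main__":
    for finding in build_findings(page_results):
        print(finding)
```

Grouping by issue rather than by page is one possible design choice: it tends to map more directly onto tickets, since one fix to a shared component often resolves the same failure on several pages.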

These tickets are then added to future sprints, and tickets with smaller scope are also available for engineers to pick up if they wrap up their sprint work ahead of schedule. Those findings then can make it into a future release, helping us to continue to improve the portal’s accessibility experience over time.

All these stages feed into each other, creating a cycle. We aim to work prevention-first in our development process, but we also make sure there is a process for the things that slip through the cracks. Without a path to address accessibility issues, they won’t get remediated.

Next steps

This can be a somewhat unsatisfying part of working in an agile methodology: as long as your team continues to work on your product, you’re never “done” testing and reporting your accessibility progress. After one release goes out (or whatever cadence your team selects), the process starts all over again for the next one, once the time has elapsed or the event that triggers a report update occurs.

Additionally, if your team really, truly gets to a point where it has met all the WCAG criteria at the level defined in your accessibility target, it is time to see how you can exceed it. In the realm of government tech, we are usually held to Section 508, which requires WCAG 2.0 at the AA level. This particular version of the standard came out in 2008, and does not consider modern technology such as smartphones and touch screens as well as it could. This is a huge limitation of Section 508, which is why it should not be any team’s exclusive measure of success in accessibility.

This is a good point for your team to start working your process towards WCAG 2.1, which many other governments around the world are already using. Just last week, the standards for WCAG 2.2 were released, which have some helpful guidance for better practices around interactions such as dragging. This is especially important if you hope for users to access the system from devices other than desktop computers, and in that case it should be considered even earlier.

Even if your team has been working towards the more modern WCAG standard, work together to identify opportunities to work towards the AAA level if you have not already.

Even better, if you aren’t already, conducting usability testing with users who have disabilities is going to be an even more effective way to identify issues in your system. This measure of success is much more meaningful than trying to align to an arbitrary list of standards written by a (very smart, helpful, and thoughtful, I may add) group of people who never met you or your users.

Like any time we try to build a good user experience, the best way to see if you are building an accessible product is to give real people the chance to test it out and show you. Even if you are testing manually with screen readers and assistive tech yourself, someone who uses this technology as their primary means of using your product is going to be far better equipped to evaluate its accessibility than you are if you are not someone with a disability yourself.

Conclusion

Everyone's process is going to look different based on their needs and resources, and you can adapt it as you iterate through subsequent releases. One example of how our process has changed over time is that we split our reporting into separate reports for our CMS and client apps to make the data a bit easier to manage and view. In our current scope of work, many more changes are being made to the client app, and scoping the report to just that side of the work has made updates easier.